A Shard Theory of Everything

My favorite insanely ambitious explanation of learning and behavior

Shard Theory is an (insanely ambitious) attempt to explain learning and behavior in both humans and AI. The basic premise is that the way we act can be described as following learned, context-dependent heuristics, or "shards".

I've always been a sucker for simple, grand, ambitious theories (Assembly Theory? defining art?). After about the fifth time giving my ten-minute spiel about why this is so awesome (and making the same joke about how unhelpfully vague the name is), I thought I might collect the explanation in a single place.

We can work through an example:

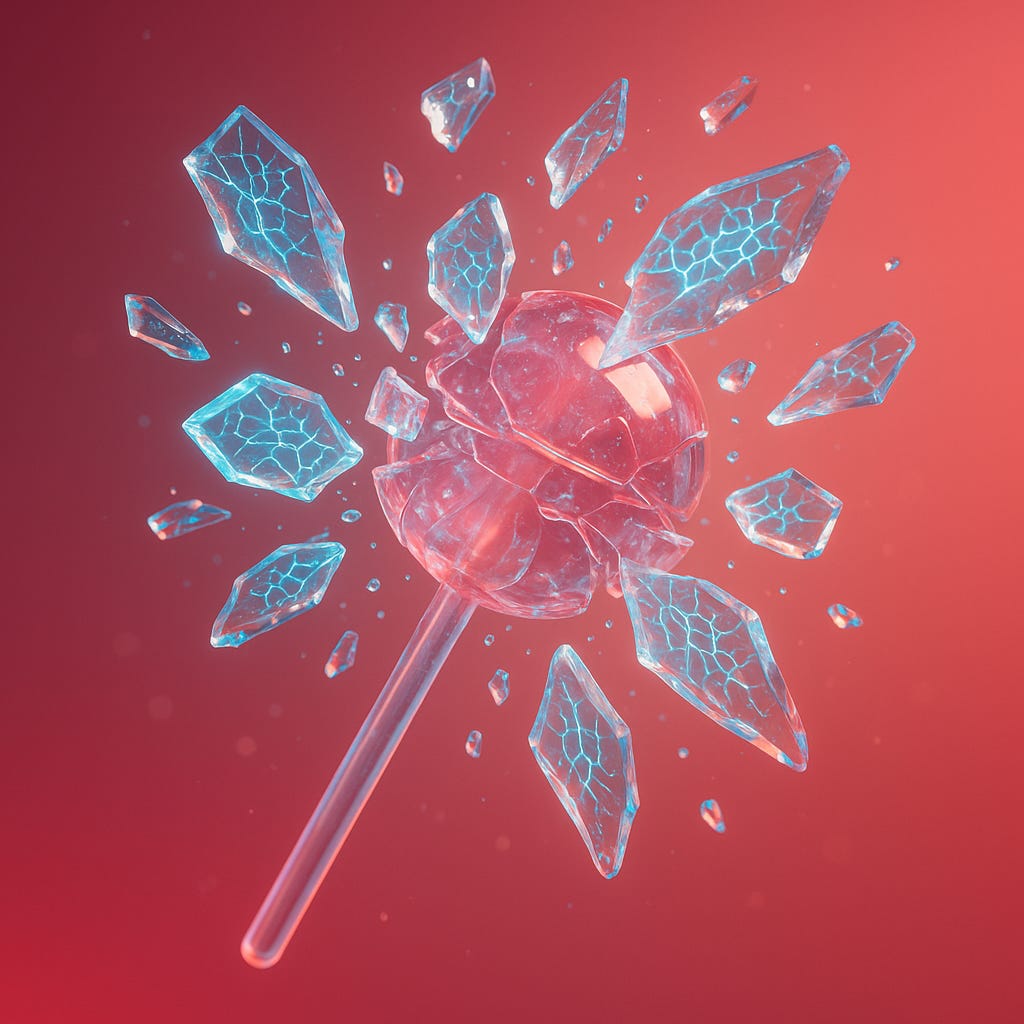

Say I'm a baby, and my dad puts a lollipop in my hands. I do a bunch of random actions, and then, by chance, one of those actions leads me to lick the lollipop.

Suddenly, I get a rush of endorphins in my brain, and the entire set of context and actions that led up to me getting that sugar high is "rewarded": detecting red pixels in my eyes, moving my hand towards my mouth, licking.

This creates a "lick lollipop shard" that activates when enough of those contextual cues and actions appear in my environment.

Let's break that down:

Learned: A simple RL algorithm (in our case, itself a product of evolution) tunes the parameters in our brain, reinforcing quite complex behavior from a simple reward function (e.g., the full range of movements the baby makes to get the lollipop, all reinforced by a single "sugar reward").

Context-dependent: This "shard" is only activated when the context is sufficiently similar to the one in which the behavior was learned.

Heuristic: Of course, the baby will never be in exactly the same situation again, so the shard can't require an exact match; the new situation just needs to be close enough.
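To make these three properties concrete, here is a toy Python sketch. It is my own illustration under loud assumptions, not anything from the shard theory literature: a hypothetical `Shard` class stores weights over discrete context cues, `reinforce` credits every cue present when a reward arrives (the crude credit assignment from the lollipop example), and `fires` checks whether enough of the learned cue weight is present, against an arbitrary `threshold` of 0.5.

```python
from dataclasses import dataclass, field


@dataclass
class Shard:
    """Hypothetical toy model of a shard: a learned, context-dependent heuristic.

    Assumption (not from shard theory itself): context is a set of discrete
    cues, and activation is the fraction of learned cue weight present now.
    """

    action: str
    weights: dict[str, float] = field(default_factory=dict)
    threshold: float = 0.5  # arbitrary cutoff for "close enough"

    def reinforce(self, context: set[str], reward: float, lr: float = 0.5) -> None:
        # Learned: credit every cue present when the reward arrived,
        # a deliberately crude stand-in for real credit assignment.
        for cue in context:
            self.weights[cue] = self.weights.get(cue, 0.0) + lr * reward

    def activation(self, context: set[str]) -> float:
        # Context-dependent: share of learned weight whose cues are present now.
        total = sum(self.weights.values())
        if total == 0:
            return 0.0
        present = sum(w for cue, w in self.weights.items() if cue in context)
        return present / total

    def fires(self, context: set[str]) -> bool:
        # Heuristic: an exact match is never required, only enough overlap.
        return self.activation(context) >= self.threshold


lick = Shard("lick lollipop")
lick.reinforce({"red pixels", "hand near mouth", "sweet smell", "dad nearby"}, reward=1.0)
print(lick.fires({"red pixels", "hand near mouth", "kitchen"}))  # True (activation 0.5)
print(lick.fires({"red pixels", "office"}))  # False (activation 0.25)
```

The first new context shares half of the learned cue weight with the lollipop episode, so the shard fires; the second shares only a quarter, so it stays quiet. Real brains obviously do credit assignment and similarity far more subtly; this only shows the shape of the idea.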

It's got flavors of schema theory from psychology and ~some neuroscience grounding, but is ultimately an ML researcher's theory of people.

Shard theory provides a compelling mechanistic explanation for otherwise unintuitive observations, such as:

why third places are so good for focus, and why a third place can feel "polluted" after you open a distracting app there even once (this reminded me of the forest metaphor mentioned in this fantastic video)

why a change of environment can act as a cure for depression (it's a full context switch)

why we know to avoid the candy aisle altogether: we "won't be able to help ourselves" once we're standing right there

nudge theory

"control theory", from Milgram's experiments

The Mind Illuminated’s model of meditation?

We can see some of the circuits in ML models too!

A shard theory of consciousness?

One thought that occurred to me recently is that shard theory might explain the emergence of consciousness: a coherent sense of self could emerge as a useful heuristic developed under these simple reward functions.

Perhaps self-awareness, meta-self-awareness, and our subjective experiences emerged as generalized coordination mechanisms between competing shards.

This wouldn't answer the deep question of "what does it mean to be me" but would, I think, be progress.

Read more / other notes:

Nothing compares to reading the original "Shard Theory of Human Values" post (you can see how much my explanation simplifies / gets wrong).

To be clear, I don't agree with the conclusion, which others have drawn, that AGI is therefore easy to control.

This is fun, but one key question (as Ethan alludes to in another comment) is how this differs from IFS/parts analysis, or other discretizations. "Parts" usually implies that the components are deeper, more comprehensive/coherent, and more persistent than shards (parts are something closer to personas). Your point about the emergence of consciousness also applies pretty well to IFS.

There's probably some nice synthesis framing.

Sounds reasonable, but make sure not to overweight the consciousness bit! That sort of functionality is also predicted by most theories, so it wouldn't be much evidence for shard theory in particular.

Also, interactions between shards remain critically under-described! What even is “a” shard? This seems to imply a sort of discretization which needs to be justified. Why not a continuous blob of hive mind with some especially pointy regions, but broadly the same mush?